Powering AI Native Leaders, Top Startups, and the Global 1000

Serve open models in seconds

Including GLM, OpenAI, Qwen, Llama and more with an API key

Scale custom models

On dedicated capacity via a private cloud API / endpoint

Deploy on-prem for full control

Of models, data and infrastructure in your data center or private cloud

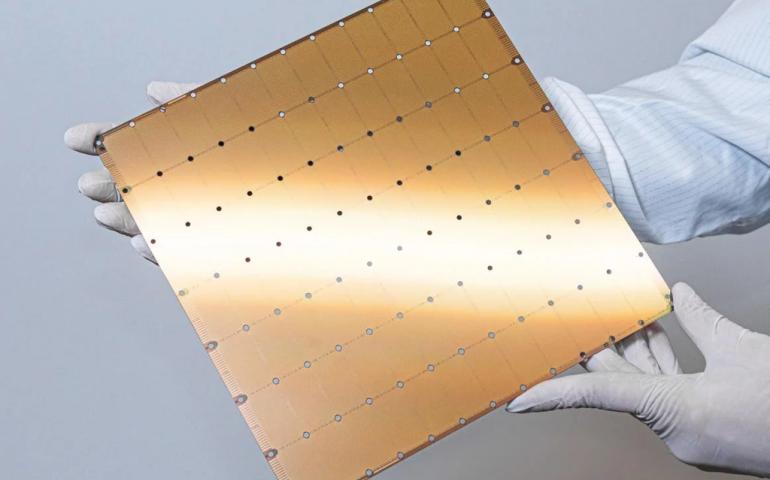

The Cerebras Advantage

Build Products that Others Can't

Instant Answers

Complex reasoning in under a second — perfect for deep search, copilots, and analysis.

Agents that never stall

Execute multi-step workflows without delays or timeouts.

Code at the speed of thought

Code, debug, and refactor instantly so developers never lose their flow.

Conversations that flow

Instant, accurate voice responses for higher quality interactions.

Customer Stories

OpenAI’s compute strategy is to build a resilient portfolio that matches the right systems to the right workloads. Cerebras adds a dedicated low-latency inference solution to our platform. That means faster responses, more natural interactions, and a stronger foundation to scale real-time AI to many more people.

By partnering with Cerebras, we are integrating cutting-edge AI infrastructure […] that allows us to deliver the unprecedented speed, most accurate and relevant insights available – helping our customers make smarter decisions with confidence.

By delivering over 2,000 tokens per second for Scout – more than 30 times faster than closed models like ChatGPT or Anthropic, Cerebras is helping developers everywhere to move faster, go deeper, and build better than ever before.

With Cerebras’ inference speed, GSK is developing innovative AI applications, such as intelligent research agents, that will fundamentally improve the productivity of our researchers and drug discovery process.

Our clinicians will be able to make more informed decisions based on genomic data, significantly reducing the time it takes to find the right treatment and – more importantly – reducing the physical toll on patients.

For Notion, productivity is everything. Cerebras gives us the instant, intelligent AI needed to power real-time features like enterprise search, and enables a faster, more seamless user experience.

Combining Cerebras’ best-in-class compute with LiveKit’s global edge network has allowed us to create AI experiences that feel more human, thanks to the system’s ultra-low latency.

We have a cancer-drug response prediction model that’s running many hundreds of times faster on that chip (Cerebras) than it runs on a conventional GPU… We are doing in a few months what would normally take a drug development process years…

Working with Cerebras lets us treat speed as a first-class design parameter. When your agent runs at ~1000 tokens per second, you have the opportunity to optimize all parts of the agent together, including context retrieval, UI, and model behavior. Cerebras enables us to pursue an entirely new set of bets, from deeper codebase understanding to fundamentally new interaction patterns.